Computers have been getting faster and more powerful for a long time, and they will continue to do so. Since the 1890 US census, when electromechanical tabulating machines using punched cards were employed to count demographic data, computer capabilities have grown exponentially. This growth spans five computing paradigms (electromechanical, relays, vacuum tubes, transistors, and integrated circuits) and goes back much further than Moore’s Law, which Gordon Moore formulated in 1965 and which applies only to integrated circuits. Not even world wars, the Great Depression, or the boom and bust of the internet broke the trend. Beyond raw computing power, many other computer capabilities also grow exponentially: disk drive capacities double every 18 months, and pixels per dollar in digital cameras double every year. While improvements in silicon integrated circuits will almost certainly end (as shrinking silicon transistors further becomes impractical or impossible), another computing paradigm will take over where 2D silicon chips leave off. Several candidate paradigms are well poised to take the next step; for example, promising work has been done on 3D chips, optical computing, carbon nanotube computing, and other forms of molecular computing. Even so, experts have consistently warned that the end of Moore’s Law could be just around the corner; even Gordon Moore himself predicted only that the trend would last 10 years.
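To make those doubling rates concrete, here is a small sketch that converts a doubling time into a total growth factor over a period. The function name `growth_factor` and the 15-year horizon are illustrative choices of mine; the doubling times are simply the figures quoted above.

```python
def growth_factor(years: float, doubling_time_years: float) -> float:
    """How many times larger a quantity gets after `years` if it
    doubles every `doubling_time_years` (hypothetical helper)."""
    return 2 ** (years / doubling_time_years)


# Doubling times quoted in the text: disk capacity every 18 months,
# camera pixels per dollar every year. The 15-year horizon is arbitrary.
print(f"Disk capacity over 15 years: {growth_factor(15, 1.5):,.0f}x")      # 1,024x
print(f"Pixels per dollar over 15 years: {growth_factor(15, 1.0):,.0f}x")  # 32,768x
```

Ten doublings already give a thousandfold increase, which is why even a modest-sounding doubling time compounds into dramatic change within a couple of decades.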

Computer processing power (measured in FLOPS) has increased smoothly for over a century. Note that the y-axis uses a logarithmic scale, on which steady exponential growth appears as a straight line; the slight upward curvature therefore implies slightly faster than exponential growth. Image from Kurzweil, Ray.

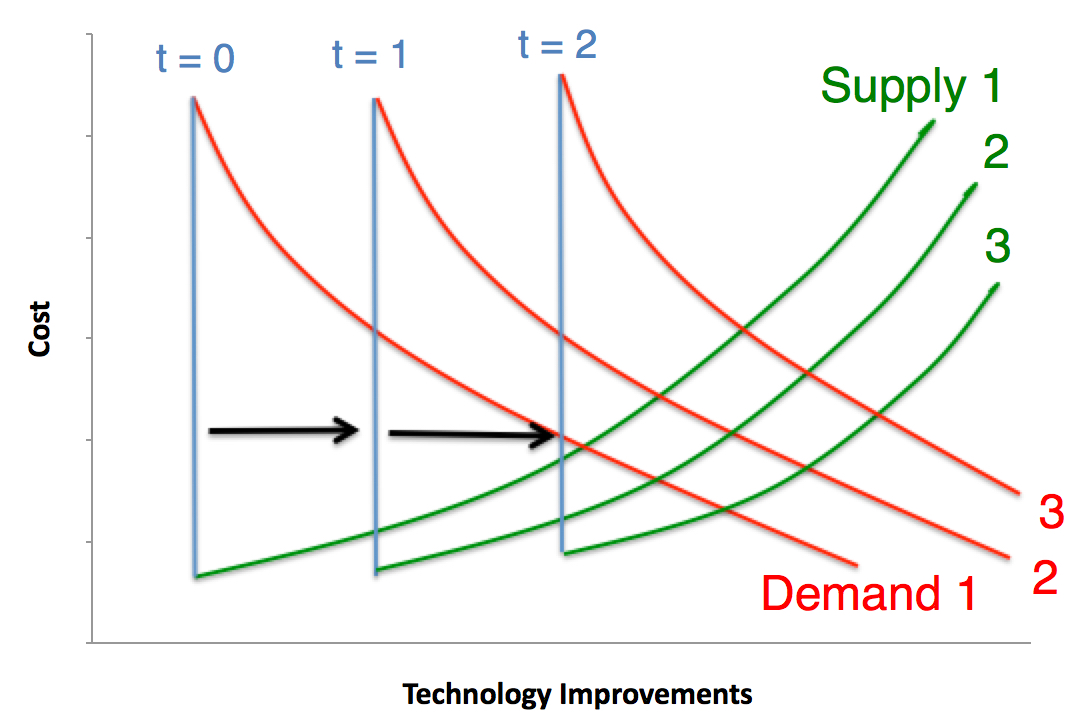
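Why a log-scale chart makes this point can be shown numerically: on a log plot, the slope between neighboring points is the difference of their logarithms. A minimal sketch, assuming two made-up series (a pure exponential that doubles every 2 years, and one with an extra quadratic term in the exponent); neither is fitted to the chart's data.

```python
import math


def log_slopes(values: list[float]) -> list[float]:
    """Slopes between neighboring points on a log10-scale plot."""
    logs = [math.log10(v) for v in values]
    return [b - a for a, b in zip(logs, logs[1:])]


years = list(range(0, 50, 10))

# Pure exponential growth: doubles every 2 years (hypothetical rate).
exponential = [2 ** (t / 2) for t in years]

# Slightly faster than exponential: an extra quadratic term in the exponent
# makes the doubling time shrink over time (hypothetical form).
faster = [2 ** (t / 2 + 0.005 * t**2) for t in years]

print([round(s, 3) for s in log_slopes(exponential)])  # constant slopes: a straight line
print([round(s, 3) for s in log_slopes(faster)])       # rising slopes: upward curvature
```

The exponential series has identical slopes everywhere, while the second series has slopes that keep rising, which is exactly the upward bend visible on a log-scale chart.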

Continual cries that computational capabilities are plateauing are sensible on the surface, since most technologies go through a brief period of rapid growth followed by much slower growth. This general phenomenon can be explained with simple economics. When a technology is new, it often has problems, so there is large demand for improvements. At the same time, the cost of supplying a marginal improvement is low, since there is plenty of low-hanging fruit. When the demand for marginal improvements outstrips the cost of supplying them, the technology grows rapidly. As the technology improves, however, the cost of supplying a marginal improvement rises (since the “easy” gains have already been achieved) and the demand for marginal improvements falls (since the technology can generally fulfill its applications adequately), so gains become much smaller. At a certain point, the cost of improving the technology may exceed the demand for any improvement, and improvements stop altogether. Improvements pick up again only if the cost of these improvements falls below the demand (say, through advances in other technologies).

For most technologies, demand for improvements initially outstrips the cost (supply) of providing those improvements. As the technology gets better, this demand decreases and the cost increases, so the technology improves more slowly.
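The supply-and-demand story can be sketched as a toy simulation. Everything numeric here is a hypothetical assumption chosen for illustration: marginal demand is modeled as `10 / (1 + level)`, marginal cost as `1 + level`, and progress each period as proportional to the gap between them while demand still exceeds cost.

```python
def simulate_ordinary_technology(periods: int = 30) -> list[float]:
    """Toy model of a typical technology's improvement over time.

    The functional forms are illustrative assumptions, not measurements:
    demand for a marginal improvement falls as the technology matures,
    the cost of supplying one rises as easy gains are used up, and the
    improvement each period is proportional to the gap between them.
    """
    level = 0.0
    history = [level]
    for _ in range(periods):
        demand = 10.0 / (1.0 + level)  # falls once the technology is "good enough"
        cost = 1.0 + level             # rises as the low-hanging fruit is picked
        # No progress at all once cost exceeds demand.
        level += 0.1 * max(0.0, demand - cost)
        history.append(level)
    return history


history = simulate_ordinary_technology()
for period in range(0, 31, 5):
    print(f"period {period:2d}: level {history[period]:.3f}")
```

Gains are large at first and shrink every period; under these assumed curves the level flattens out near sqrt(10) - 1 (about 2.16), the point where marginal demand equals marginal cost.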

Computers, however, are unusual in a number of respects. As computers improve, new capabilities are constantly realized. Twenty years ago, nobody was buying iPhones for $10M, which is roughly what their computing power would have cost at the time. Because new uses for improved computers are always on the horizon, demand for marginal improvements in computers stays high; today, for example, AR/VR and Big Data both call for more computing power. On the supply side, we use computers to create the next generation of computers, creating a positive feedback loop: we wouldn’t be able to create next decade’s computers without this decade’s computers. Consider the analogy of picking low-hanging fruit from a tree, then using the branch that held this fruit to knock down higher, out-of-reach fruit. The branch attached to that fruit can then be fastened to the first branch to reach even higher fruit, and so on. In short, the cost of supplying marginal improvements in computers does not increase explosively the way it does for most technologies. This feedback loop is unusually strong: lightbulbs aren’t really used to make better lightbulbs, even if they do allow scientists to stay up slightly later and work a little harder. Since the cost of marginal improvements in computers doesn’t systematically increase as it does for other technologies, and the demand doesn’t decrease, computers are perpetually “stuck” in a condition reminiscent of a new technology, which leads to long-standing exponential growth.

Because each generation of computers is essential to creating the next, improvements in computers push the supply curve of further improvements to the right. Demand for further improvements also increases as new applications appear on the horizon. Computers are therefore kept in a state somewhat similar to that of a new technology and experience prolonged exponential improvement.
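Applying the supply-and-demand reasoning to computers, a toy sketch of the feedback loop might hold demand constant (new applications keep appearing) and hold constant the cost of a given *relative* improvement (each generation’s tools scale with its capability). The constants `demand = 10.0`, `cost = 1.0`, and the 0.1 rate are hypothetical values for illustration only.

```python
def simulate_computers(periods: int = 30) -> list[float]:
    """Toy model of computing capability with the feedback loop.

    Illustrative assumptions, not measurements: demand for improvement
    stays constant because new applications keep appearing, and because
    each generation of computers helps build the next, the cost of a
    given *relative* improvement stays flat instead of rising.
    """
    demand = 10.0      # held high by new uses (assumption)
    cost = 1.0         # cost of a fixed-percentage improvement (assumption)
    capability = 1.0
    history = [capability]
    for _ in range(periods):
        # Better tools scale each step with the capability already achieved,
        # so the gain is a constant fraction rather than a shrinking amount.
        capability *= 1.0 + 0.1 * max(0.0, demand - cost)
        history.append(capability)
    return history


history = simulate_computers(10)
ratios = [b / a for a, b in zip(history, history[1:])]
print([round(r, 3) for r in ratios])  # the same multiplier every period
```

Because the period-to-period ratio never shrinks, capability grows exponentially for as long as the two assumptions hold; if either demand fell or the relative cost rose, the multiplier would drift toward 1.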

The implications of exponential growth in computing go beyond the computer industry itself. Other fields and industries that depend heavily on computer capabilities can likewise see exponential growth. For instance, in the decades to come, we will likely see exponential gains in computational nanotechnology, computational neuroscience, and bioinformatics. Each of these fields has the potential to transform society at large. Unfortunately, culture is often slow to catch up to technology, so the number of people entering these fields is probably disproportionately small compared with how important they will become. Students trying to have a particularly large impact might have a better chance in one of these fields than in related fields that don’t rest on computer capabilities.

